`sklearn.metrics.cohen_kappa_score(y1, y2, labels=None, weights=None)`: this is the example found on the sklearn website.

Multi-Class Metrics Made Simple, Part III: The Kappa Score (aka Cohen's Kappa Coefficient), by Boaz Shmueli, Towards Data Science

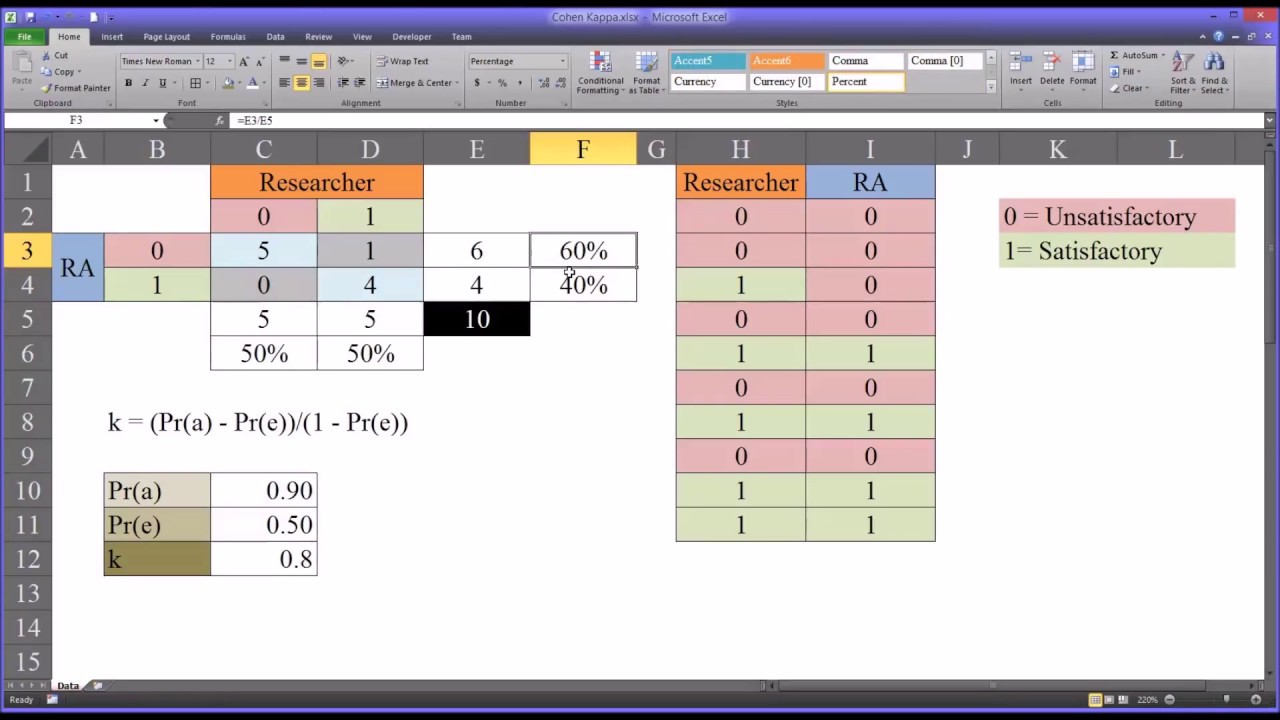

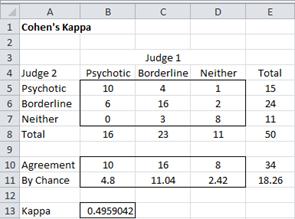

To calculate kappa, Cohen introduced two terms.

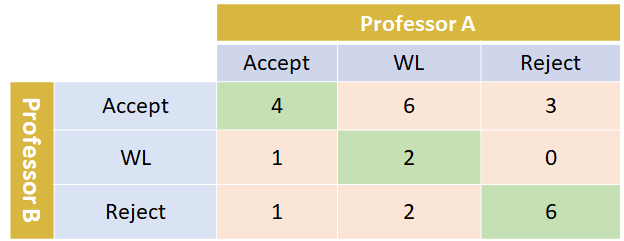

Cohen's kappa coefficient example: $\kappa = \frac{p_o - p_e}{1 - p_e}$, so $\kappa = \frac{0.6429 - 0.5}{1 - 0.5} = 0.2857$. In our example, Cohen's kappa is $\kappa = 0.65$, which represents a fair to good strength of agreement according to Fleiss et al. If Agree is smaller than ChanceAgree, the kappa score is negative, denoting that the degree of agreement is lower than chance agreement.

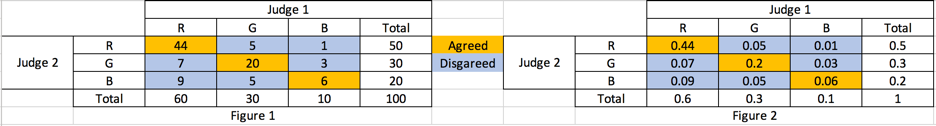

$\kappa = \frac{p_o - p_e}{1 - p_e}$, where $p_o$ is the empirical probability of agreement on the label assigned to any sample (the observed agreement ratio), and $p_e$ is the expected agreement when both annotators assign labels randomly. Cohen's kappa sample size. If your ratings are numbers, like 1, 2 and 3, this works fine.
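As a minimal sketch, the formula can be written as a small Python function. The name `kappa_from_agreement` is illustrative and does not come from any library; the inputs 0.6429 and 0.5 are the worked figures quoted in this article.

```python
def kappa_from_agreement(p_o: float, p_e: float) -> float:
    """Cohen's kappa from observed agreement p_o and chance agreement p_e."""
    if p_e >= 1.0:
        # The denominator 1 - p_e would be zero, so kappa is undefined.
        raise ValueError("kappa is undefined when p_e equals 1")
    return (p_o - p_e) / (1.0 - p_e)


# Worked figures from the article: p_o = 0.6429, p_e = 0.5.
kappa = kappa_from_agreement(0.6429, 0.5)
print(kappa)
```

With the rounded input 0.6429 the result lands very close to the quoted 0.2857; a value of `p_o` below `p_e` gives a negative kappa, and `p_o == p_e` gives exactly 0.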

Back to our example. In general, percent agreement is the number of times two raters agree divided by the total number of ratings performed. Percent agreement and Cohen's chance-corrected kappa statistic (Cohen, 1960).
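Percent agreement is simple enough to sketch in a few lines. The function name and the two short rating lists below are hypothetical, made up purely for illustration.

```python
def percent_agreement(ratings_a, ratings_b):
    """Fraction of items on which two raters gave the same rating."""
    if len(ratings_a) != len(ratings_b):
        raise ValueError("both raters must rate the same items")
    if not ratings_a:
        raise ValueError("at least one rating is required")
    agreements = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return agreements / len(ratings_a)


# Hypothetical ratings: the raters agree on 4 of 5 items.
rater_a = ["yes", "yes", "no", "no", "yes"]
rater_b = ["yes", "no", "no", "no", "yes"]
print(percent_agreement(rater_a, rater_b))  # 0.8
```

Note that this figure makes no correction for agreement that would occur by chance, which is the gap kappa is meant to fill.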

It is defined as $\kappa = \frac{p_o - p_e}{1 - p_e}$, where $p_o$ is the relative observed agreement among raters. You are now able to distinguish between reliability and validity, explain Cohen's kappa, and evaluate it.

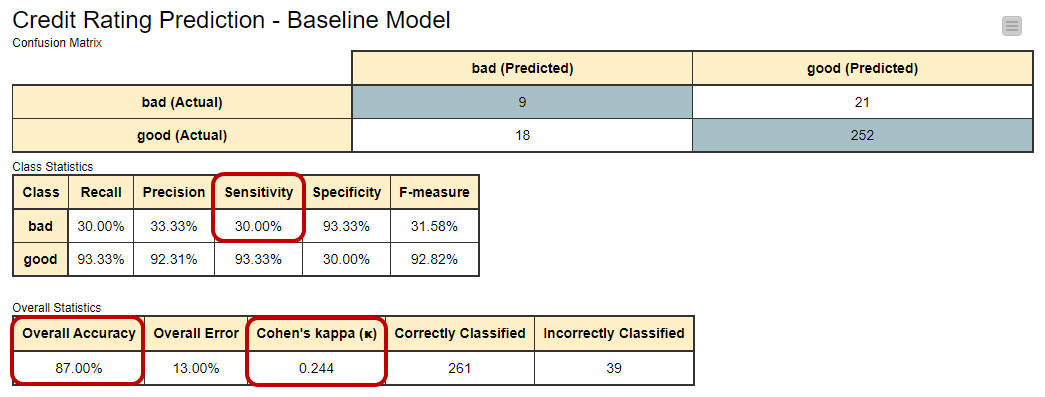

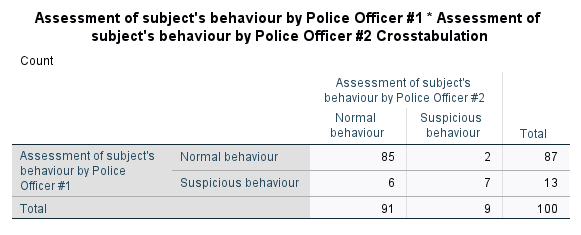

There was moderate agreement between the two officers' judgements, κ = .593 (95% CI, .300 to .886), p < .001. The value for kappa is 0.16, indicating a poor level of agreement. This statistic is very useful, although now that I understand how it works, I believe it may be under-utilized when optimizing algorithms to a specific metric.
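A confidence interval for kappa is commonly built as kappa plus or minus a normal quantile times a standard error. The sketch below uses the simple large-sample approximation $SE = \sqrt{p_o(1 - p_o) / (n(1 - p_e)^2)}$; this is an assumption for illustration, and statistical packages often use a more refined variance formula, so it is not expected to reproduce the interval quoted above. The function name and the sample size `n = 70` are likewise illustrative.

```python
import math


def kappa_confidence_interval(p_o, p_e, n, z=1.96):
    """Approximate CI for Cohen's kappa (simple large-sample standard error)."""
    kappa = (p_o - p_e) / (1.0 - p_e)
    # Assumed approximation; other variance formulas give slightly different widths.
    se = math.sqrt(p_o * (1.0 - p_o) / (n * (1.0 - p_e) ** 2))
    return kappa - z * se, kappa + z * se


# Illustrative inputs: p_o = 0.6429 and p_e = 0.5, with an assumed n of 70.
low, high = kappa_confidence_interval(0.6429, 0.5, 70)
print(round(low, 2), round(high, 2))  # 0.06 0.51
```

An interval that excludes zero is consistent with a kappa that differs significantly from chance agreement.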

Returning to our original example on chest findings in pneumonia, the agreement on the presence of tactile fremitus was high (85%), but the kappa of 0.01 would seem to indicate that this agreement is really very poor. For example, kappa can be used to compare the ability of different raters to classify subjects into one of several groups. Cohen's kappa coefficient formula.

A value of 1 implies perfect agreement, and values less than 1 imply less than perfect agreement. For our example, this is calculated as … $p_e$ is estimated using a per-annotator empirical prior over the class labels.

$p_o$ and $p_e$ are both computed from the observed data; for $p_e$, the data are used to estimate the probability of each observer randomly assigning each category. Kappa is always less than or equal to 1. Cohen's kappa coefficient-based sample size determination in epidemiology.

You'll notice that the Cohen's kappa write-up above includes not only the kappa (κ) statistic and p-value, but also the 95% confidence interval (95% CI). $P(\text{Yes}) = \frac{25 + 10}{70} \cdot \frac{25 + 15}{70} = 0.285714$. For this example, the Fleiss-Cohen agreement weights are as follows.

Historically, the kappa coefficient is used to express the degree of agreement between two raters when the same two raters rate each of a sample of n subjects independently, with the ratings being on a categorical scale consisting of 2 or more categories. $p_e$ = the hypothetical probability of chance agreement. To display the kappa agreement weights, you can specify the `WTKAPPA(PRINTKWTS)` option.

0.96, 0.84, 0.00, 0.96, 0.36, and 0.64. This is confirmed by the obtained p-value (p < 0.05), indicating that our calculated kappa is significantly different from zero. First, we present an alternative but equivalent approach to calculating the standard error for Cohen's kappa, namely …
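Fleiss-Cohen (quadratic) agreement weights are usually defined from ordered category scores as $w_{ij} = 1 - (C_i - C_j)^2 / (C_C - C_1)^2$, where $C_1$ and $C_C$ are the first and last scores. The sketch below applies that definition; the scores `[0, 1, 2, 5]` are an assumption, chosen only because they reproduce the six weights listed above, and the function name is illustrative.

```python
from itertools import combinations


def fleiss_cohen_weights(scores):
    """Pairwise Fleiss-Cohen agreement weights for ordered category scores."""
    span_sq = (scores[-1] - scores[0]) ** 2  # (C_C - C_1)^2
    return {
        (i, j): 1.0 - (scores[i] - scores[j]) ** 2 / span_sq
        for i, j in combinations(range(len(scores)), 2)
    }


# Assumed scores; the article does not state them.
weights = fleiss_cohen_weights([0, 1, 2, 5])
print([round(w, 2) for w in weights.values()])
# [0.96, 0.84, 0.0, 0.96, 0.36, 0.64]
```

Pairs of categories that are close together keep a weight near 1, so near-misses count as partial agreement, while the two extreme categories get a weight of 0.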

$\kappa = \frac{p_o - p_e}{1 - p_e} = 1 - \frac{1 - p_o}{1 - p_e}$. In contrast, if Agree = ChanceAgree, kappa is 0, signifying that the professors' agreement is by chance. Here $n$ is the sample size. In the case where $k = 2$, we can express the standard deviation (sd) in terms of $\kappa$, $p_1$ and $q_1$, where $p_1$ is the marginal …
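The two ways of writing kappa, $\frac{p_o - p_e}{1 - p_e}$ and $1 - \frac{1 - p_o}{1 - p_e}$, are the same quantity. A short check, using only the symbols $p_o$ and $p_e$ already defined, rewrites the numerator as a difference of two complements:

$$
\frac{p_o - p_e}{1 - p_e}
= \frac{(1 - p_e) - (1 - p_o)}{1 - p_e}
= 1 - \frac{1 - p_o}{1 - p_e}
$$

Read this way, kappa is one minus the ratio of observed disagreement to the disagreement expected by chance.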

In an examination of self-reported prescription use and prescription use estimated by electronic medical records … $P(\text{No}) = \frac{15 + 20}{70} \cdot \frac{10 + 20}{70} = 0.214285$. Lastly, we'll use $p_o$ and $p_e$ to calculate Cohen's kappa.

Cohen's kappa statistic, κ, is a measure of agreement between categorical variables X and Y. Before we dive into how kappa is calculated, let's take an example: assume there … The kappa statistic estimates the proportion of agreement among raters after removing the agreement expected by chance.

SAS calculates weighted kappa weights based on unformatted values. `test kappa wtkap;` In our case, as we calculate shortly, ChanceAgree is 0.3024.

0.41 to 0.60: moderate agreement. The following table was observed. In other words, we expect about 30% of agreements to occur by chance, which …

`tables Rater1*Rater2 / agree;` To get p-values for kappa and weighted kappa, use the statement. An example of kappa.

Measure rater agreement where outcomes are nominal. 0.21 to 0.40: fair agreement. 0.01 to 0.20: slight agreement.
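The agreement bands listed in this article (slight, fair, moderate, substantial, almost perfect) can be turned into a small lookup. This is a sketch only: the function name is illustrative, and the label for values at or below zero is an assumption, since the listed bands start at 0.01.

```python
def interpret_kappa(kappa):
    """Map a kappa value to the agreement bands listed in the article."""
    if kappa <= 0.0:
        # Assumed label; the article's bands do not cover this range.
        return "no agreement beyond chance"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect or perfect"


print(interpret_kappa(0.593))  # moderate
```

Other authors use different cut-offs and labels, so a band name is only meaningful alongside the scale it was taken from.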

The reason for the discrepancy between the unadjusted level of agreement and kappa is that tactile fremitus is … $\kappa = \frac{\Pr(a) - \Pr(e)}{1 - \Pr(e)}$, where $\Pr(a)$ represents the actual observed agreement and $\Pr(e)$ represents chance agreement. A second example of kappa.

We now show how to calculate the sample size requirements in the case where there are only two rating categories. $p_e = 0.285714 + 0.214285 = 0.5$. 0.61 to 0.80: substantial agreement.
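The chance-agreement arithmetic for the 70-rating example can be reproduced from a 2x2 table. In the sketch below the cell counts are inferred from the sums that appear in the expressions for P(Yes) and P(No); the row and column orientation and the function name are assumptions.

```python
def kappa_from_table(table):
    """Return (p_o, p_e, kappa) for a square table of rating counts."""
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: share of ratings on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    # Chance agreement: sum over categories of the product of marginal shares.
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / (n * n)
    return p_o, p_e, (p_o - p_e) / (1.0 - p_e)


# Assumed layout: rows are rater A (Yes, No), columns are rater B (Yes, No).
table = [[25, 10], [15, 20]]
p_o, p_e, kappa = kappa_from_table(table)
print(round(p_o, 4), round(p_e, 4), round(kappa, 4))  # 0.6429 0.5 0.2857
```

The same function works for more than two categories, since the chance term simply sums one product of marginals per category.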

45 112 106 738. Calculation of Cohen's kappa may be performed according to the following formula. `proc freq data=ratings;`

`from sklearn.metrics import cohen_kappa_score`, then `y_true = [2, 0, 2, 2, 0, 1]`, `y_pred = [0, 0, 2, 2, 0, 2]`, and finally `cohen_kappa_score(y_true, y_pred)`. 0.81 to 1.00: almost perfect or perfect agreement.
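To see what the library call computes, here is a pure-Python sketch of unweighted kappa for two label lists. It is not scikit-learn's implementation, and the function name `cohen_kappa` is illustrative; it uses the same `y_true` and `y_pred` values as the sklearn example.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Unweighted Cohen's kappa for two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(labels_a)
    # Observed agreement: share of items with identical labels.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement from each rater's own label frequencies.
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1.0 - p_e)


y_true = [2, 0, 2, 2, 0, 1]
y_pred = [0, 0, 2, 2, 0, 2]
print(round(cohen_kappa(y_true, y_pred), 4))  # 0.4286
```

Here the raters agree on 4 of 6 items ($p_o \approx 0.667$) and chance agreement is $15/36 \approx 0.417$, so kappa falls in the moderate band.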


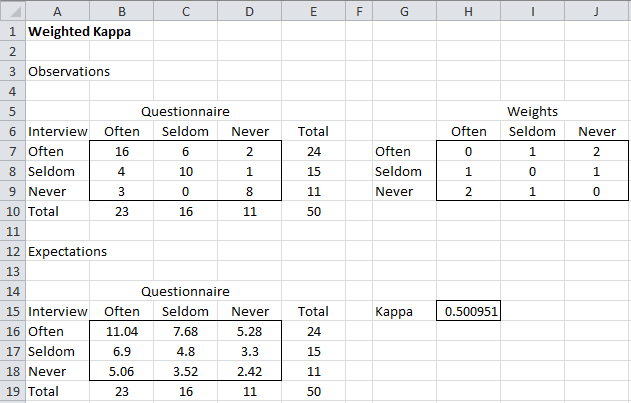

